A headless CMS is very similar to a database. It provides an API and user interface to manage your content of course you can also retrieve the content using. In almost every use case you want to apply a filter to only query the content items you actually need. The question is: How fast are these queries and filters?

The problem

In this article we describe the problem using an example project: Our goal is to build a travel website where the user can search for hotels. For each hotel we also store the name of the location (city). Our website has a one landing page per city where we show the hotels in this city and we also provide a search where the user can enter a location. Of course we only want to show the hotels in selected location and therefore we need to apply a filter before we display the hotels to the user. If we have only very few hotels (lets say 50) we can just fetch all hotels from the headless CMS and then reduce our result set to only the hotels in the current location. But if we scale our business this is not the appropriate solution. The expedia hotel database has more than 300.000 hotels and if we assume that each hotel has 100kB of text, we would have to download 30GB of data in each request. Therefore we must apply the filter as early as possible.

Fortunately many headless CMS provide filtering in their API but the question is: How fast is this?

Somehow the CMS needs to store your content somewhere and usually they either store your content directly on a disk or in database, which also persists the content on a disk. Usually this is a SSD, which makes everything much faster than a hard disk. In this example we assume that the headless CMS stores everything on disk, because it makes our example a little bit easier and we can also apply the same techniques that a database engine would do. To get the matching hotels we have to loop over all the hotels in our database file and test if the a hotel meets the given location or not. In pseudo code it would look like this:

These kind of queries are called table scans or collection scans, because we have to scan all records.

If we consider that we have a state of the art SSD we can read the data with 2000MB/second, so it would take around 15seconds to fetch and scan the hotels. Of course it is not that simple:

- Very often we can just stop our search if we have enough hotels, but if we need the hotels for a very small village there is a chance that we have to loop over all hotels. If the user enters a location where no hotel exists at all we definitely have to loop over all the hotels.

- We can also compress the data before we store them to the disk. It depends how good the compression rate is but it it could be around 70% for our hotel database. Therefore save a lot of disk space. But of course we also have to decompress the data when we read it, which puts more pressure on our CPU.

- When we read our hotels we have to convert a list of bytes to an structure that we can analyze. This is called deserialization and also takes time.

- In a big datacenter the disk is not on the same machine as the headless CMS and therefore we read the data over the network which makes it slower.

- Our users have to share the disk performance with other users who also want to view hotels. Therefore our query is much slower.

Overall it is hard to estimate the duration of a query, but usually it is very slow. If we only have one user and our code and servers are very fast we can perhaps deliver the results in 30 seconds, but it is very likely that our query takes several minutes to complete. Some systems also have an upper limit how long a query could take and the query would just timeout and do not deliver a result even though there is one in database. Of course this is not acceptable and have to find a better solution.

Solutions

Restrict the use case

One solution is not to allow such big data sets. For example contentful [1] only allows 25.000 (or 50.000 records) per project. GraphCMS [2] has the same limitation. Of course this makes our queries much faster. Because we also want to store other content, we probably have not more than 10.000 records per content type. Filtering 10.000 hotels is of course 30 times faster than filtering 300.000 hotels, but is also means that you cannot use a headless CMS for our use case. But there is a better solution:

Indexing content items

The reason why our queries are so slow is that we have a lot of hotels and that each hotel is very big. If we could store the hotels in the memory it would be much faster, but to do that we have to reduce the size. For our query we are only interested in the location of the city, so we can optimize our headless CMS by keeping a an optimized data structure in memory that only contains the ID and location for each hotel. As a table it would look like this:

In pseudo code it would look like this:

If we just say that each location has around 10 characters (which needs 20 bytes) and the ID takes another 4 bytes our index needs around 7MB and looping over only 0,2% of the data will perform much better. 20MB is perhaps more realistic but you get the idea. We can also use other data structures such as hash tables or a binary tree for our index and boost the performance even more. Therefore we are able to identity the matching hotel IDs in less than one millisecond and after fetching the full hotels from the disk and delivering them over the API over the internet we can provide the results in 100 milliseconds.

In some cases we can also use the index even though it does not cover all fields of our query. If we also filter by the rating and want to have all five-star hotels in a location we can get the results with the following pseudo code:

In this example we have to fetch a lot of hotels from the disk that we don’t need (4 stars or less), but at least we do not have to fetch the hotels from other locations.

We have a strategy now to boost our performance but there are a few problems:

- We need to know upfront which queries we make to create the appropriate indexes. This also changes over time when more features and search capabilities should be added to our website.

- We cannot add every field to an index, because this would make our index as large as our original records and we would loose the advantages of an index. It would also consume too much memory.

We have 2 options now to provide indexes to our users:

Option 1: Provide a user interface for developers to create indexes.

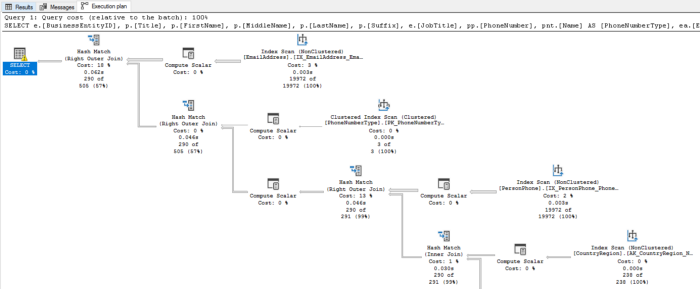

If we use a database in our headless CMS we could expose the functionality to create indexes for developers. This is complicated because to create the appropriate index we have to analyze our queries first. Database engines provide tools for this and you can analyze complex diagrams to understand what actually happens under the hood when an query is executed:

image

imageThere is no alternative to these diagrams and therefore the headless CMS has to provide the same functionality. This adds a lot of complexity to the system and does not really solve the problem. The first issue is that sometimes we have to change our content structure to use indexes. For example you also need an index for sorting. In older versions of MySQL (a populate database engine) it was not possible to sort in descending order using an index. For example if you want to show a list of products with highest price first. A solution was to maintain an additional field with an “negative price”, e.g.

nevativePrice = price * (-1)

The negative price is than lower the higher the price is and you can sort by the negative price in ascending order to show the highest price first.

Creating the correct indexes needs a lot of detailed knowledge about the used database and is not a task for a Junior Developer. As a headless CMS developer we also risk to expose details about the underlaying database to our users and we increase the coupling because we cannot switch to another technology that easily.

Option 2: Optimize queries with automated indexes

We can also create indexes on the fly by analyzing queries. Our headless CMS stores which fields are used in our queries and how often they are executed. If an query is very slow and is used very often we create an index for that. Managing indexes is not easy for a developer and much harder if we want to automate the process. Because each index consumes resources just to keep it in memory and also to update the index when new records are added to the headless CMS, we also have to destroy indexes when they are not needed anymore. If also means that our website might be very slow at the beginning and becomes faster over the time which is not always acceptable, because we want to have a great experience for all users.

Both solutions are problematic because an index consumes a lot of resources and if we allow the system or the user to create indexes on the fly it puts a lot of stress to our database. This only works if we create an isolated database server for each customer, which is expensive. This can only work if you provide a hosted headless CMS solution for of 500$ or more per month. So it might be option for GraphCMS and Contentful but not for Squidex.

Working solutions

There are also working solutions:

Offloading the problem

We have seen that it is not possible for headless CMS to provide fast queries or that it is just to expensive. Therefore the solution is to offload this problem to another service. All headless CMS solutions have support for Webhooks. A webhook is a piece of code that is triggered on an external service whenever something happens in a system. For example you create a webhook so that new content items are automatically sent to specialized search services. For example to algolia or Elastic Search. These services offer a lot of tools and options to optimize your search experience for your users and you can also transform your content items before you sent them to a search service to get rid of fields that you do not need for the search, which is a great and simple optimization. You have to really understand these services and you have to make the necessary optimizations, but usually these services just work when you get started with your new project.

Self-hosting

If we do not use a SaaS offering and host the headless CMS ourselves we have full control over the database and can create the indexes that we need. It is still a task for a Senior developer but if we built everything from scratch and do not use a headless CMS we also have to optimize our queries.

Are all queries slow?

Usually a query is relatively slow because there is no index. But there are a few exceptions:

- Full text search: A full text search always needs an index. It is just not possible to provide this functionality without an index. Therefore it usually very fast.

- Geolocation searches are often provided with an index as well because some database engines do not provide this feature otherwise.

- Default queries are usually optimized. The headless CMS provides a few features that are very prominent in the user interface. For example to get all content items with that are published or archived. These queries are static and therefore the headless CMS provider can optimize them with a custom index.

Conclusion

As described above it is complicated to provide fast queries for all use cases, if not impossible. But there are a few things you can do:

- Do not use a headless CMS for all data. Especially if you have a lot of automatically created records or imported records a normal database is a better solution.

- Hire a good developer who can optimize your queries.

- Cache your results using a simple in-memory cache or a CDN.

- Choose a specialized search solution for your queries or select a CMS that you can host yourself or just pay a lot of money.